How to build a GPT bot on Discord that generates DALL·E 3 images

This guide explains how to create a Discord bot that you can:

- Use to generate images and converse with DALL·E 3 and ChatGPT

- Update quickly with push-to-deploy

- Develop and test locally

A conversation with an image generation bot

Throughout this tutorial, we assume you are already familiar with the concept of tools (also known as functions). If you need an introduction to the topic, see the appendix section on the concept of a function in LLMs.

Writing our first function

We'll use LaunchKit to build our functions. LaunchKit simplifies the development of tools for LLMs by handling the nitty-gritty details of passing information to and from the LLM, so we can concentrate on each function's core logic.
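To make "passing information to the LLM" concrete: before a model can call a Python function, the function has to be described to it as a JSON schema. The sketch below shows one way such a description could be derived from a function's signature and docstring with the standard `inspect` module. It only illustrates the idea and is not LaunchKit's implementation; `function_to_tool_schema` and its type mapping are hypothetical, and the output follows the shape of OpenAI's function-calling tool definitions.

```python
import inspect
from typing import Any, Callable

# Hypothetical mapping from Python annotations to JSON Schema type names
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool_schema(func: Callable[..., Any]) -> dict:
    """Describe `func` as an OpenAI-style tool definition (illustrative only)."""
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown or missing annotations fall back to "string"
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        # Parameters without a default value must be supplied by the LLM
        if param.default is inspect.Parameter.empty:
            required.append(name)
    # Use the first line of the docstring as the tool description
    doc = inspect.getdoc(func) or ""
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": doc.splitlines()[0] if doc else "",
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


# A stub with the same signature and docstring summary as the tutorial's function
def generate_image(prompt: str):
    """Generate an image with DALL·E 3"""


print(function_to_tool_schema(generate_image))
```

The docstring matters here: in this kind of scheme it becomes the description the model reads when deciding whether to call the tool.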

Before we start, make sure you have the following:

- GitHub account

- OpenAI API key

- Discord server where you can invite bots

- Python 3.11 installed on your machine (we recommend pyenv for managing local Python installations)

- PDM, the modern Python package manager
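If you want to double-check the list above before continuing, a small script like the following can help. It is a convenience sketch and not part of the template: it compares the running interpreter against 3.11 with `sys.version_info`, looks for the `pdm` and `git` executables on your `PATH` with `shutil.which`, and checks that `OPENAI_API_KEY` (the variable the function in this tutorial reads) is set.

```python
import os
import shutil
import sys


def check_prerequisites(env=None) -> list[str]:
    """Return human-readable problems; an empty list means everything looks fine."""
    env = os.environ if env is None else env
    problems = []
    # The tutorial targets Python 3.11
    if sys.version_info[:2] < (3, 11):
        problems.append(f"Python 3.11+ expected, found {sys.version.split()[0]}")
    # PDM and Git must be installed and reachable on the PATH
    for tool in ("pdm", "git"):
        if shutil.which(tool) is None:
            problems.append(f"'{tool}' was not found on PATH")
    # generate_image reads the OpenAI key from this environment variable
    if not env.get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is not set")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    for issue in issues:
        print("-", issue)
    print(f"{len(issues)} problem(s) found." if issues else "All prerequisites look good.")
```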

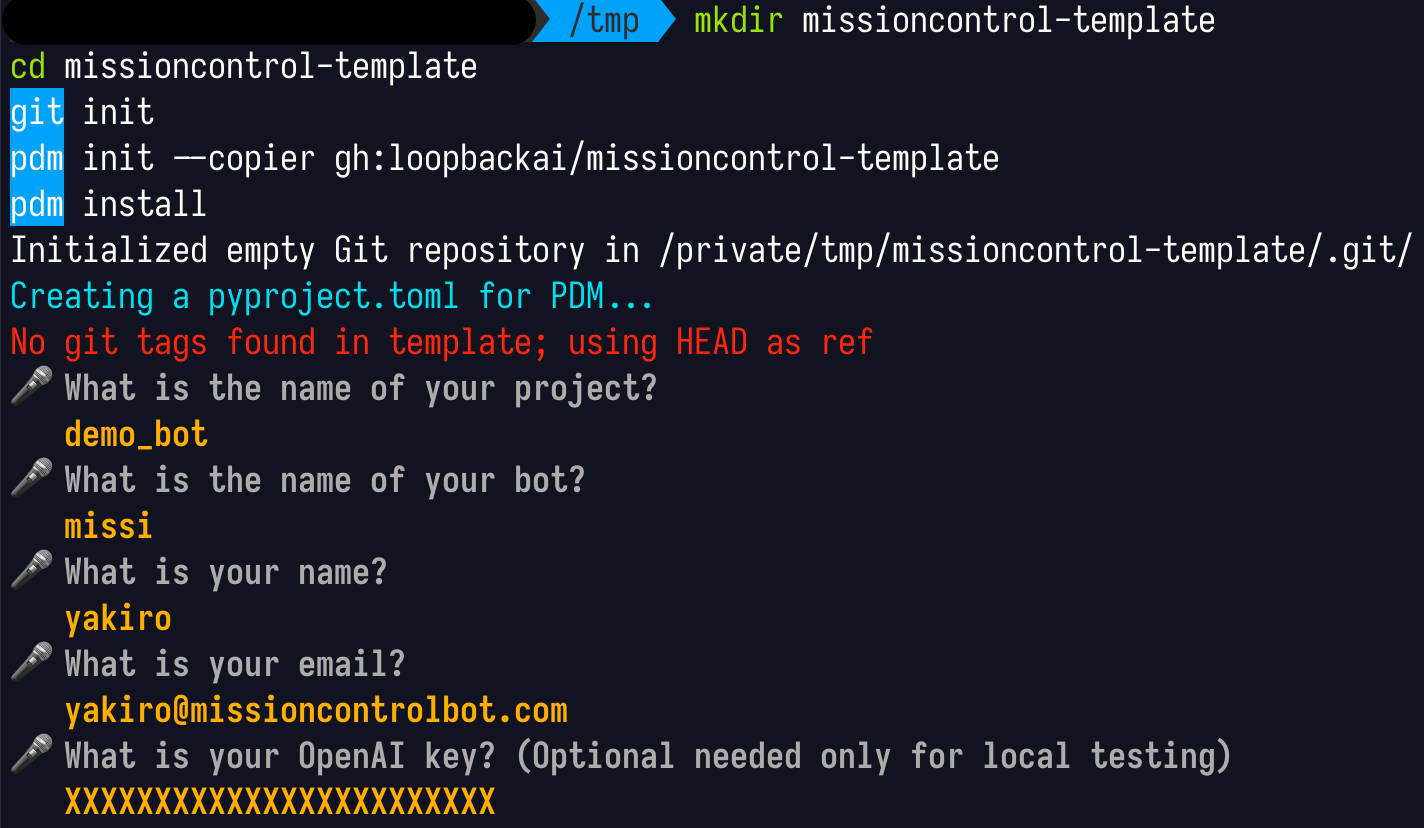

Once you have all the prerequisites installed, run the commands below:

```shell
mkdir missioncontrol-template
cd missioncontrol-template
git init
pdm init --copier gh:loopbackai/missioncontrol-template
pdm install
```

These commands create a new folder containing a Git repository, initialize MissionControl's template inside it, and then install the necessary dependencies.

Template setup menu

For now, adding an image-generation function involves only a single file: `actions.py`, which resides under /src/[project_name].

Open the file in your favorite text editor and add a function that generates a new image with DALL·E 3 based on the user's prompt:

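```python
import os

import requests

# Read the key from the environment so it never lands in source control
OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY", "")


def generate_image(prompt: str):
    """
    Generate an image with DALL·E 3

    Parameters:
        prompt (str): The text description of the image
    """
    try:
        # Ask OpenAI's image generation endpoint for one DALL·E 3 image
        response = requests.post(
            "https://api.openai.com/v1/images/generations",
            headers={
                "Content-Type": "application/json",
                "Authorization": f"Bearer {OPENAI_API_KEY}",
            },
            json={
                "model": "dall-e-3",
                "prompt": prompt,
                "n": 1,  # DALL·E 3 accepts only n=1
                "size": "1024x1024",
            },
            timeout=120,  # generation can be slow, but never wait forever
        )
    except requests.RequestException as err:
        # Network problems are reported to the LLM instead of crashing the bot
        return {"message": f"image generation failed: {err}"}

    # Pass API errors back to the LLM so it can explain them to the user
    if response.status_code != 200:
        return {"message": f"image generation failed: {response.text}"}

    # The response holds a list of generated images; we asked for exactly one
    return {
        "message": "image generated",
        "imageUrl": response.json()["data"][0]["url"],
    }
```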

This code depends on the requests package, so add it to your project by running:

```shell
pdm add requests
```

`generate_image` accepts the user's prompt, uses the DALL·E 3 API to generate a matching image, and returns the image URL.
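The last line of `generate_image` indexes straight into the response body. For a slightly more defensive variant, the helper below (hypothetical, not part of the template) parses the JSON body, returns the same dictionary shape, and also passes along the `revised_prompt` field that DALL·E 3 includes when it rewrites a prompt. It takes the raw response text, so it can be tried without network access or an API key.

```python
import json


def parse_image_response(body: str) -> dict:
    """Turn a /v1/images/generations JSON body into the tool's return value."""
    try:
        data = json.loads(body).get("data") or []
    except (json.JSONDecodeError, AttributeError):
        return {"message": "image generation failed: unreadable response"}
    if not data or "url" not in data[0]:
        return {"message": "image generation failed: no image in response"}
    result = {"message": "image generated", "imageUrl": data[0]["url"]}
    # DALL·E 3 may rewrite the prompt; surfacing it lets the LLM explain the result
    if "revised_prompt" in data[0]:
        result["revisedPrompt"] = data[0]["revised_prompt"]
    return result


sample = '{"created": 1700000000, "data": [{"url": "https://example.com/cat.png", "revised_prompt": "A cat"}]}'
print(parse_image_response(sample))
```

Inside `generate_image`, the final `return` after the status check would become `return parse_image_response(response.text)`.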

To make the function accessible to the LLM through LaunchKit, we must include it in the list of available functions. Do this by adding `generate_image` to the list of actions:

```python
actions = LaunchKit([
    launch_rocket,
    add_user_to_newsletter,
    generate_image,  # the new DALL·E 3 function
])
```
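Conceptually, the list passed to `LaunchKit` acts as a registry: when the LLM asks for a tool by name, the framework looks the function up and calls it with the arguments the LLM produced. The sketch below illustrates that idea with a plain dictionary. `ToolRegistry` and its methods are hypothetical names, not LaunchKit's API, and the stubs stand in for the template's real functions.

```python
from typing import Any, Callable


class ToolRegistry:
    """Hypothetical name -> function registry, illustrating the idea only."""

    def __init__(self, functions: list[Callable[..., Any]]):
        # Index functions by their Python name, which is what the LLM will request
        self._functions = {func.__name__: func for func in functions}

    def names(self) -> list[str]:
        return sorted(self._functions)

    def call(self, name: str, arguments: dict) -> Any:
        # Unknown names are reported back instead of raising, so the LLM can recover
        func = self._functions.get(name)
        if func is None:
            return {"message": f"unknown tool: {name}"}
        return func(**arguments)


# Stubs standing in for the template's functions
def launch_rocket():
    return {"message": "rocket launched"}


def generate_image(prompt: str):
    return {"message": "image generated", "prompt": prompt}


registry = ToolRegistry([launch_rocket, generate_image])
print(registry.call("generate_image", {"prompt": "a red fox"}))
```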

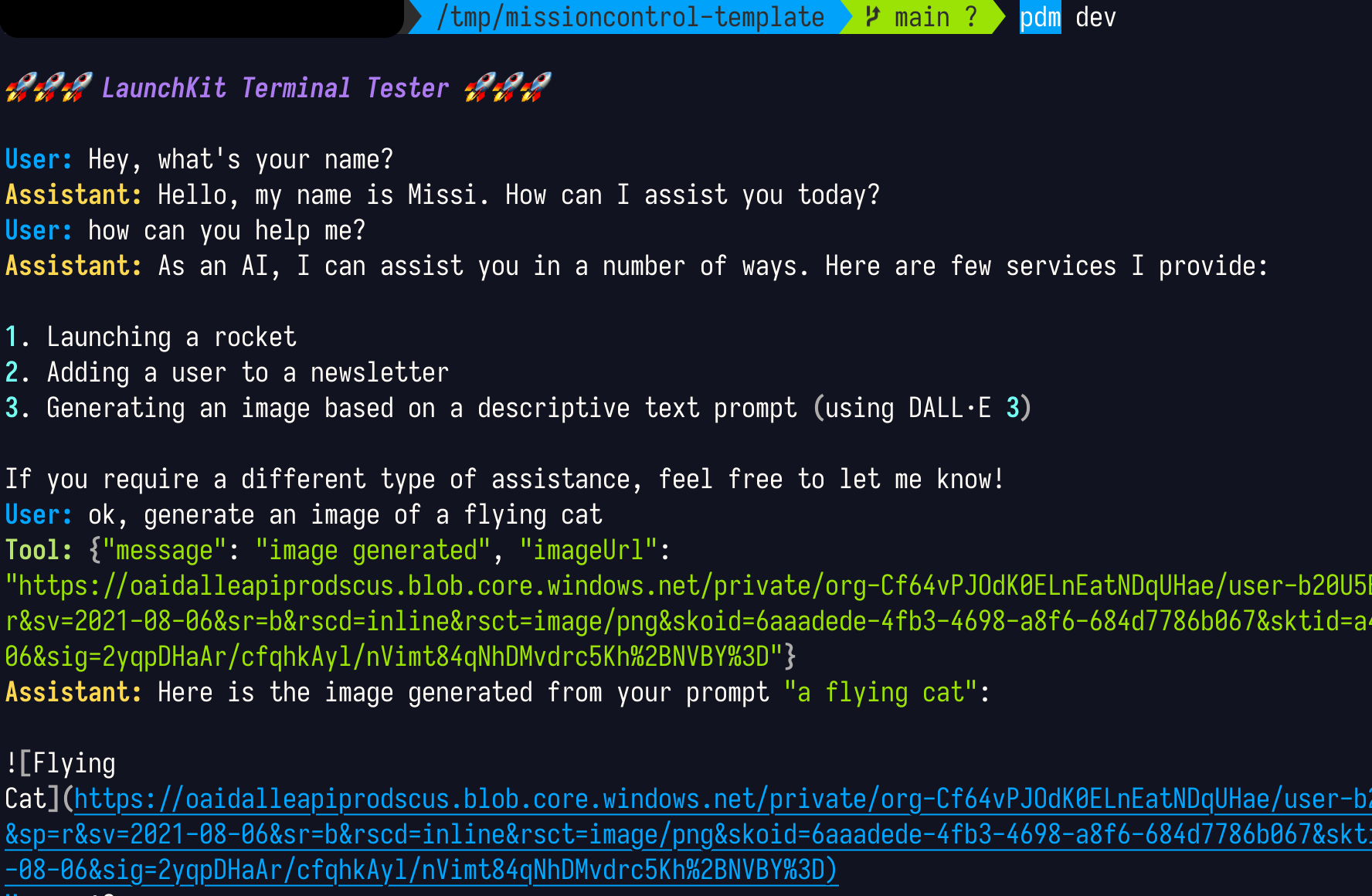

And there you have it: we're all set to test our setup locally. LaunchKit offers convenient command-line tools that let us converse with the bot and confirm that `generate_image` works as expected. Start a test session by running:

```shell
pdm dev
```

Here is an example of a short session with the bot:

Local test session

As you can see, the bot can hold a general conversation like ChatGPT, and when asked to create an image it automatically invokes the external function to call the DALL·E API, generate the image, and reply with the image URL.

Rolling Out Your Chatbot on Discord Using MissionControl

Testing in the terminal is effective, but our end goal is to make the chatbot available on a platform that you or your users already use to communicate.

We will deploy the bot with MissionControl, which complements LaunchKit in our toolkit. MissionControl is designed to deploy bots directly to Discord and many other communication platforms.

Step 1

Begin by creating a bot and inviting it to your server. Use this guide to navigate the bot creation process, and write down the bot token it generates, as we will need it in the next steps.
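Inviting a bot to a server is done through an OAuth2 authorization URL. If you prefer to build that URL yourself, the sketch below shows its general shape: the application's client ID, the `bot` scope, and a permissions integer formed by OR-ing Discord permission bit flags. The client ID is a placeholder, and the chosen permissions (view channels, send messages, embed links, read message history) are an assumption about what an image bot needs; adjust them to your server's policy.

```python
from urllib.parse import urlencode

# Discord permission bit flags (see Discord's permissions documentation)
VIEW_CHANNEL = 1 << 10
SEND_MESSAGES = 1 << 11
EMBED_LINKS = 1 << 14
READ_MESSAGE_HISTORY = 1 << 16


def bot_invite_url(client_id: str, permissions: int) -> str:
    """Build an OAuth2 URL that adds a bot to a server with the given permissions."""
    query = urlencode({"client_id": client_id, "permissions": permissions, "scope": "bot"})
    return f"https://discord.com/oauth2/authorize?{query}"


perms = VIEW_CHANNEL | SEND_MESSAGES | EMBED_LINKS | READ_MESSAGE_HISTORY
print(bot_invite_url("YOUR_CLIENT_ID", perms))
```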

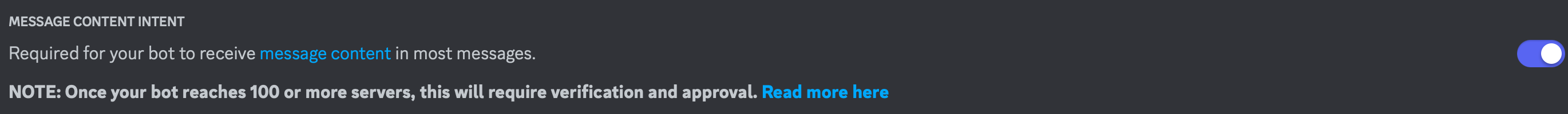

Step 2

Enable the 'Message Content Intent' for your bot (in the Discord Developer Portal, under the bot's Privileged Gateway Intents) so it can read message content. The bot needs this to interpret user requests within messages.
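As background: a Discord bot declares which events it wants through a gateway "intents" bitfield sent when it connects, and Message Content is a privileged intent, which is why it has to be switched on in the portal. Without it, the content of most server messages arrives empty. MissionControl handles the connection for you, so this sketch is for understanding only: it computes the value a bot would declare for guilds, guild messages, and message content, using the bit positions from Discord's gateway documentation.

```python
# Gateway intent bit flags, as listed in Discord's gateway documentation
GUILDS = 1 << 0
GUILD_MESSAGES = 1 << 9
DIRECT_MESSAGES = 1 << 12
MESSAGE_CONTENT = 1 << 15  # privileged: must be enabled in the Developer Portal


def has_intent(intents: int, flag: int) -> bool:
    """Check whether a given intent bit is set in the bitfield."""
    return (intents & flag) == flag


intents = GUILDS | GUILD_MESSAGES | MESSAGE_CONTENT
print(intents)
```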

Step 3

Next, create a GitHub repository to host the source code of the bot and its functions. You can follow this guide for instructions.

Step 4

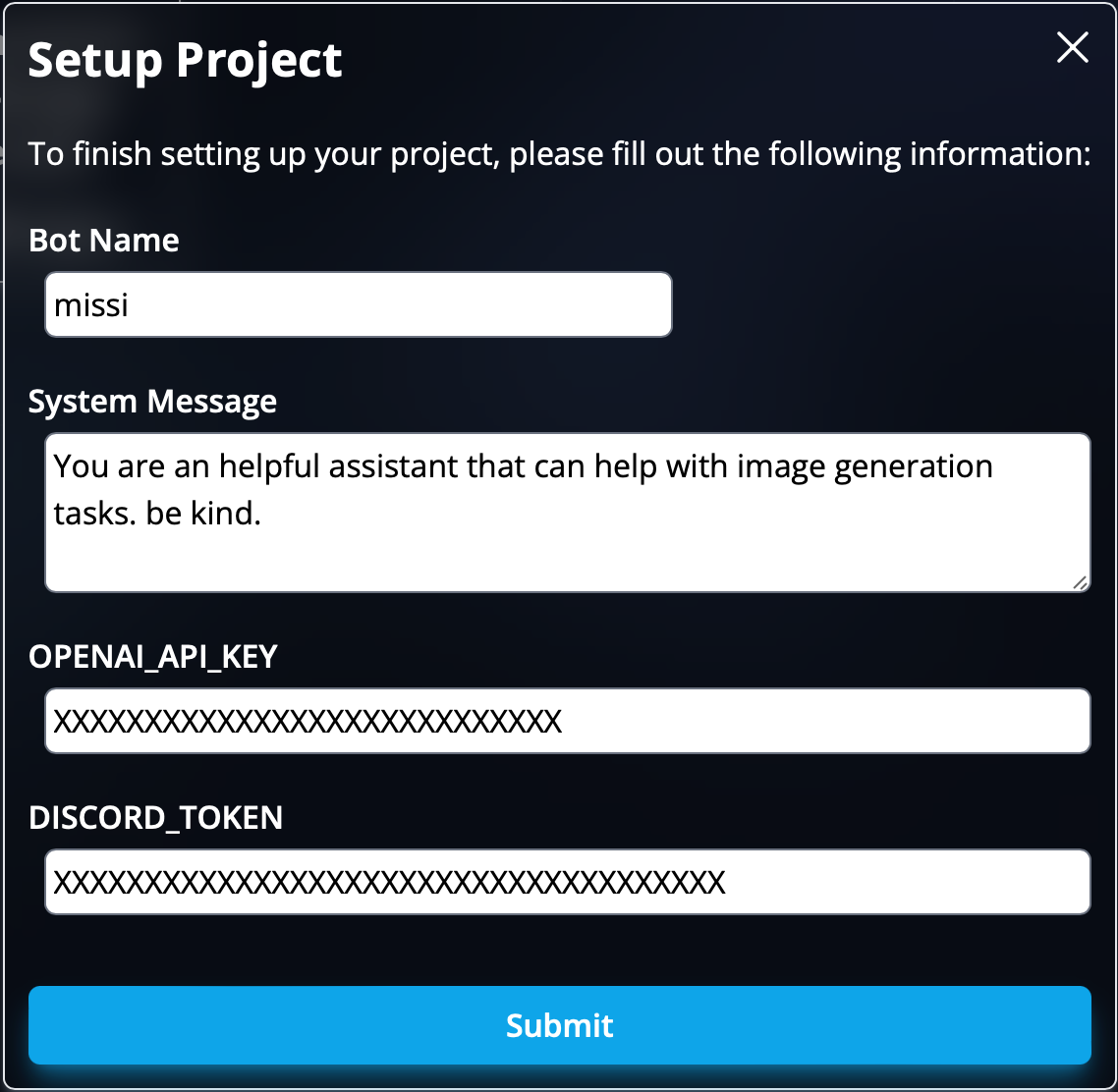

To complete the setup, link MissionControl to your GitHub repository. Use this link to grant the MissionControl app the required permissions to connect with your repository. Throughout the project setup process, you will be prompted to enter details such as your bot’s name and system messages. Most importantly, be sure to provide the secret tokens for both Discord and OpenAI.
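Whether the bot runs through MissionControl or locally with `pdm dev`, these tokens should stay out of the repository. `generate_image` already reads `OPENAI_API_KEY` from the environment; the helpers below generalize that into a fail-fast check. `missing_secrets`, `require_secrets`, and the `DISCORD_BOT_TOKEN` name are hypothetical, chosen for illustration rather than taken from MissionControl's documentation.

```python
import os
from collections.abc import Mapping
from typing import Optional


def missing_secrets(names: list[str], env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the names of required secrets that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


def require_secrets(names: list[str], env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Return the requested secrets, or raise one error listing every missing name."""
    env = os.environ if env is None else env
    missing = missing_secrets(names, env)
    if missing:
        raise RuntimeError(f"missing secrets: {', '.join(missing)}")
    return {name: env[name] for name in names}


if __name__ == "__main__":
    # DISCORD_BOT_TOKEN is a hypothetical variable name used for illustration
    wanted = ["OPENAI_API_KEY", "DISCORD_BOT_TOKEN"]
    print("missing:", missing_secrets(wanted) or "none")
```

Reporting every missing name at once saves a round of redeploys when several secrets are misconfigured.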

MissionControl project setup screen


And that's it: MissionControl will automatically redeploy your chatbot with each repository update.

Here are a few ideas for improvements you can experiment with:

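- Generate multiple variations of an image for each prompt. With DALL·E 2 you can do this by raising the `n` parameter, but DALL·E 3 only accepts `n=1`, so you would need to send several requests instead.

- Create another function that upscales the chosen image; check out the Stability AI API.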
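Because DALL·E 3 accepts only one image per request, one way to get several variations is to send several requests concurrently. The sketch below does this with `concurrent.futures.ThreadPoolExecutor`. It takes the image function as a parameter (in the bot you would pass `generate_image`), and a stub stands in for the real API call here. `generate_variations` is a hypothetical name; keep in mind that each request is billed separately.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def generate_variations(prompt: str, count: int, generate: Callable[[str], dict]) -> dict:
    """Call `generate` `count` times in parallel and collect the successful URLs."""
    # Threads suit this workload: each call mostly waits on the network
    with ThreadPoolExecutor(max_workers=max(1, min(count, 4))) as pool:
        results = list(pool.map(generate, [prompt] * count))
    urls = [r["imageUrl"] for r in results if "imageUrl" in r]
    if not urls:
        return {"message": "image generation failed for every variation"}
    return {"message": f"generated {len(urls)} of {count} images", "imageUrls": urls}


# Stub standing in for generate_image, so the sketch runs without an API key
def fake_generate(prompt: str) -> dict:
    return {"message": "image generated", "imageUrl": f"https://example.com/{prompt}.png"}


print(generate_variations("fox", 3, fake_generate))
```

To expose this to the LLM, you would wrap it in a function whose parameters are all JSON-friendly (for example `prompt: str, count: int`) and pass `generate_image` internally.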

Conclusion

In this tutorial we covered the process of building and deploying a custom Discord bot that integrates the advanced capabilities of GPT and DALL·E 3. We began by understanding the function of tools in LLMs and moved through the steps of writing, testing, and deploying our function using LaunchKit and MissionControl. By setting up the necessary tools and following a few simple steps, we leveraged these powerful frameworks to deploy a bot that can generate images from user prompts and update automatically with every change to the master branch. This not only enhances the user experience on Discord but also showcases the seamless integration of AI-driven functionalities within popular communication platforms.

Appendix

Exploring the Concept of a Function (Tool) in Large Language Models (LLMs)

In the realm of Large Language Models (LLMs), a tool serves as a means to 'amplify' the capabilities of an LLM beyond what's already built-in. The core concept revolves around empowering the LLM to engage with an external tool—often via an API. This enables it to carry out operations or gather information that wasn't originally within its scope.

Such functionality unlocks a world of intriguing possibilities: imagine crafting a bespoke chatbot that arranges your appointments, handles routine administrative tasks, or creates both images and text (as the bot in this tutorial does). With the rising interest in this functionality, many of today's sophisticated LLMs offer tool integration features, including OpenAI's ChatGPT, Anthropic's Claude, and even Meta's LLaMA.

At its essence, the process of a Large Language Model (LLM) utilizing a tool doesn’t involve the LLM directly invoking anything. Instead, the LLM works with a predetermined definition of the tool to produce a structured request for tool use. From there, it's the job of the underlying runtime framework to act. It interprets this structured request, executes the external call to the tool, and then delivers the outcome back to the LLM so that it can craft an appropriate response for the user.
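The loop described above can be sketched without any SDK. The example assumes the message shapes of OpenAI's Chat Completions API, where the model returns `tool_calls` whose `arguments` field is a JSON-encoded string, and the runtime answers each call with a message of role `tool`. The assistant message is hand-written sample data and `fake_generate_image` is a stub; a real runtime (LaunchKit, in this tutorial) would then send these tool messages back to the model to obtain the final reply.

```python
import json
from typing import Any, Callable


def run_tool_calls(assistant_message: dict, tools: dict[str, Callable[..., Any]]) -> list[dict]:
    """Execute each requested tool call and build the `tool` messages for the LLM."""
    replies = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        try:
            # The model sends arguments as a JSON string, not as a dict
            args = json.loads(call["function"]["arguments"] or "{}")
            result = tools[name](**args)
        except Exception as err:  # report failures to the model instead of crashing
            result = {"message": f"tool {name} failed: {err}"}
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(result),
        })
    return replies


def fake_generate_image(prompt: str) -> dict:
    return {"message": "image generated", "imageUrl": "https://example.com/image.png"}


# Hand-written example of what the model might return when asked for an image
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "generate_image", "arguments": '{"prompt": "a lighthouse at dusk"}'},
    }],
}

for reply in run_tool_calls(assistant_message, {"generate_image": fake_generate_image}):
    print(reply)
```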

Can it work with other image generation frameworks?

Yes. If you can connect to the image generation API from Python, the same approach should work and the procedure will be much the same. However, some interfaces are more complex. For instance, Stability's SDXL API might require you to host the generated image externally before you can give the user its URL.
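When an image API returns the picture itself (often as base64-encoded bytes) instead of a URL, the function has to store it somewhere reachable before replying. The sketch below decodes the bytes and writes them into a directory that is assumed to be served publicly at `public_base_url`. Both the directory and the URL are assumptions standing in for whatever storage you actually use (an object store, a CDN, and so on), and `host_b64_image` is a hypothetical name.

```python
import base64
import hashlib
import tempfile
from pathlib import Path


def host_b64_image(b64_data: str, out_dir: Path, public_base_url: str) -> dict:
    """Decode a base64 PNG, save it under out_dir, and return a tool-style reply."""
    image_bytes = base64.b64decode(b64_data)
    # Name the file after a hash of its content: identical images share one file
    filename = hashlib.sha256(image_bytes).hexdigest()[:16] + ".png"
    out_dir.mkdir(parents=True, exist_ok=True)
    (out_dir / filename).write_bytes(image_bytes)
    return {
        "message": "image generated",
        "imageUrl": f"{public_base_url.rstrip('/')}/{filename}",
    }


with tempfile.TemporaryDirectory() as tmp:
    fake_png = base64.b64encode(b"\x89PNG fake bytes").decode()
    print(host_b64_image(fake_png, Path(tmp), "https://images.example.com/"))
```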

Can it work with other LLMs?

In this tutorial we used LaunchKit, which natively supports OpenAI's ChatGPT but can be extended to accommodate other LLMs. Broadly speaking, replicating this process with any LLM that accepts tool definitions written as JSON Schema should be relatively straightforward.
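Because tool definitions are mostly JSON Schema, moving to another provider is often a matter of reshaping the same schema. As an example, the function below converts an OpenAI-style tool definition into the shape Anthropic's Messages API expects (`name`, `description`, `input_schema`). It is a sketch of the idea; check each provider's documentation for the exact fields it supports.

```python
def openai_tool_to_anthropic(tool: dict) -> dict:
    """Reshape {"type": "function", "function": {...}} into Anthropic's tool format."""
    fn = tool["function"]
    return {
        "name": fn["name"],
        "description": fn.get("description", ""),
        # Both formats describe the arguments with a JSON Schema object
        "input_schema": fn.get("parameters", {"type": "object", "properties": {}}),
    }


openai_tool = {
    "type": "function",
    "function": {
        "name": "generate_image",
        "description": "Generate an image with DALL·E 3",
        "parameters": {
            "type": "object",
            "properties": {"prompt": {"type": "string", "description": "The text description of the image"}},
            "required": ["prompt"],
        },
    },
}

print(openai_tool_to_anthropic(openai_tool))
```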